Developing the application

Writing an ARToolKit application involves the following steps:

1. Initialize the video path and read in the marker pattern files and camera parameters.

2. Grab a video input frame.

3. Detect the markers and recognized patterns in the video input frame.

4. Calculate the camera transformation relative to the detected patterns.

5. Draw the virtual objects on the detected patterns.

6. Close the video path down.
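Step 4 produces, for each detected marker, a transformation from marker coordinates to camera coordinates. In ARToolKit 2.x, arGetTransMat returns it as a 3×4 matrix (double[3][4]) holding a rotation and a translation. The sketch below shows what that matrix means by applying one to a corner of a square marker. It assumes the marker is centred on the origin of its own coordinate system and lies in the z = 0 plane. The helpers marker_corner and marker_to_camera are hypothetical, not ARToolKit functions.

```c
#include <assert.h>

/* Hypothetical helper: corner k (0..3) of a square marker of the given
 * width, centred on the marker origin and lying in the z = 0 plane. */
static void marker_corner(double width, int k, double p[3])
{
    double h = width / 2.0;
    assert(k >= 0 && k < 4);
    p[0] = (k == 0 || k == 3) ? -h : h;
    p[1] = (k < 2) ? h : -h;
    p[2] = 0.0;
}

/* Hypothetical helper: apply a 3x4 transform [R | t] to a marker-space
 * point, cam = R * p + t, using the same row layout as a double[3][4]
 * pose matrix (columns 0-2 rotation, column 3 translation). */
static void marker_to_camera(double trans[3][4], const double p[3], double cam[3])
{
    for (int i = 0; i < 3; i++)
        cam[i] = trans[i][0] * p[0] + trans[i][1] * p[1]
               + trans[i][2] * p[2] + trans[i][3];
}
```

With an identity rotation and translation (0, 0, 500), corner (40, 40, 0) of an 80.0-wide marker maps to (40, 40, 500): the translation column simply shifts the point, and the rotation part would turn it first.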

Steps 2 through 5 are repeated continuously until the application quits, while steps 1 and 6 are performed only at initialization and shutdown of the application, respectively. In addition to these steps, the application may need to respond to mouse, keyboard or other application-specific events.
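The control flow implied by these six steps can be sketched in plain C. This is a minimal sketch, assuming hypothetical stub functions (open_video, grab_frame, detect_markers, compute_pose, draw_objects, close_video) in place of the real ARToolKit calls, and a simulated quit after a fixed number of frames. It only illustrates that step 1 runs once, steps 2 through 5 run once per frame, and step 6 runs once at shutdown.

```c
#include <assert.h>

/* Hypothetical stand-ins for the six steps; none are ARToolKit calls.
 * frames_drawn counts how often the per-frame steps ran. */
static int video_open = 0;
static int frames_drawn = 0;

static void open_video(void)     { video_open = 1; frames_drawn = 0; } /* step 1 */
static int  grab_frame(void)     { return video_open; }                /* step 2 */
static void detect_markers(void) { /* step 3: find squares, match patterns */ }
static void compute_pose(void)   { /* step 4: camera transform per marker */ }
static void draw_objects(void)   { frames_drawn++; }                   /* step 5 */
static void close_video(void)    { video_open = 0; }                   /* step 6 */

/* Step 1 once, steps 2-5 once per frame until the simulated quit,
 * then step 6 once. Returns the number of frames rendered. */
static int run_app(int frames_before_quit)
{
    open_video();
    while (frames_drawn < frames_before_quit && grab_frame()) {
        detect_markers();
        compute_pose();
        draw_objects();
    }
    close_video();
    return frames_drawn;
}
```

For example, run_app(5) renders five frames and leaves video_open at 0. In a real ARToolKit program the loop is driven by argMainLoop rather than an explicit while loop.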

To show in detail how to develop an application, we will step through the source code for the simpleTest program. It is found in the examples/simple/ directory, and the file we will be looking at is simpleTest.c. This program consists of a main routine and several graphics drawing routines. The main routine is shown below:

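```c
#include <GL/glut.h>
#include <AR/gsub.h>    /* argMainLoop() */
#include <AR/video.h>   /* arVideoCapStart() */

/* Defined later in simpleTest.c */
static void init(void);
static void keyEvent(unsigned char key, int x, int y);
static void mainLoop(void);

int main(int argc, char **argv)
{
    glutInit(&argc, argv);   /* initialize GLUT before any window is opened */
    init();                  /* step 1: video path, patterns, camera parameters, window */

    arVideoCapStart();       /* start video image capture */
    argMainLoop(NULL, keyEvent, mainLoop);  /* no mouse handler */
    return (0);
}
```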

This routine calls init, an initialization routine that sets up the video path, reads in the marker and camera parameters, and sets up the graphics window. This corresponds to step 1 above.

Next, the function arVideoCapStart() starts video image capture.

Finally, the argMainLoop function is called, which starts the main program loop and associates the function keyEvent with keyboard events and mainLoop with the main graphics rendering loop. argMainLoop is defined in the file gsub.c, which can be found in the directory lib/Src/Gl/.

In simpleTest.c, each of the six application steps above is handled by its own function or library call. The functions corresponding to steps 2 through 5 are called within the mainLoop function.
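argMainLoop takes three handler functions (mouse, keyboard and main loop) and calls them as events arrive; simpleTest passes NULL for the mouse handler. To show what registering handlers this way means, here is a toy dispatcher. It is an assumption-laden sketch, not ARToolKit code: toy_main_loop is hypothetical, it replays a scripted string of key presses instead of real GLUT events, and it stops when the key handler clears a running flag. The sample handlers treat ESC (0x1b) as quit, as simpleTest.c's keyEvent does.

```c
#include <assert.h>
#include <stddef.h>

/* Handler types with the same shapes as argMainLoop's three arguments. */
typedef void (*MouseFunc)(int button, int state, int x, int y);
typedef void (*KeyFunc)(unsigned char key, int x, int y);
typedef void (*MainFunc)(void);

static int running;

/* Hypothetical toy dispatcher: for each scripted key, deliver it to
 * keyFunc, then run one frame of mainFunc unless the key handler asked
 * to quit. NULL handlers are skipped. Returns the number of frames run. */
static int toy_main_loop(MouseFunc mouseFunc, KeyFunc keyFunc,
                         MainFunc mainFunc, const char *keys)
{
    int frames = 0;
    (void)mouseFunc;                  /* no mouse events are simulated */
    running = 1;
    for (size_t i = 0; running && keys[i] != '\0'; i++) {
        if (keyFunc != NULL)
            keyFunc((unsigned char)keys[i], 0, 0);
        if (running && mainFunc != NULL) {
            mainFunc();
            frames++;
        }
    }
    return frames;
}

/* Handlers in the style of simpleTest.c: ESC (0x1b) ends the loop. */
static void keyEvent(unsigned char key, int x, int y)
{
    (void)x;
    (void)y;
    if (key == 0x1b)
        running = 0;
}

static void mainLoop(void) { /* steps 2-5 would run here each frame */ }
```

The design point is that the loop owns control flow and the application only supplies callbacks; passing NULL, as simpleTest does for the mouse, simply means that kind of event is ignored.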

Recognizing different patterns

The simpleTest program uses template matching to recognize the different patterns inside the marker squares. Squares in the video input stream are matched against pre-trained patterns.
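Template matching of this kind can be illustrated with a small, self-contained sketch. It is a simplification, not ARToolKit's own matcher: patterns are hypothetical 4×4 grayscale grids, and each template is compared with the sampled square in all four 90° orientations because a marker can be seen rotated. The score is the signed square of the correlation coefficient, which ranks candidates in the same order as the coefficient itself while avoiding sqrt(). The best template is accepted only if its score reaches a caller-chosen threshold.

```c
#include <assert.h>
#include <string.h>

#define PATT_SIZE 4  /* hypothetical tiny pattern resolution for illustration */

typedef double Patt[PATT_SIZE][PATT_SIZE];

/* Rotate 90 degrees clockwise: the top-left cell moves to the top-right. */
static void rotate90(Patt src, Patt dst)
{
    for (int r = 0; r < PATT_SIZE; r++)
        for (int c = 0; c < PATT_SIZE; c++)
            dst[r][c] = src[PATT_SIZE - 1 - c][r];
}

/* Signed square of the correlation coefficient between two patterns
 * (range -1..1; 0 if either pattern is uniform). */
static double corr_score(Patt a, Patt b)
{
    const int n = PATT_SIZE * PATT_SIZE;
    double ma = 0.0, mb = 0.0, num = 0.0, da = 0.0, db = 0.0;
    for (int r = 0; r < PATT_SIZE; r++)
        for (int c = 0; c < PATT_SIZE; c++) {
            ma += a[r][c];
            mb += b[r][c];
        }
    ma /= n;
    mb /= n;
    for (int r = 0; r < PATT_SIZE; r++)
        for (int c = 0; c < PATT_SIZE; c++) {
            double x = a[r][c] - ma, y = b[r][c] - mb;
            num += x * y;
            da += x * x;
            db += y * y;
        }
    if (da == 0.0 || db == 0.0)
        return 0.0;
    return (num >= 0.0 ? num * num : -num * num) / (da * db);
}

/* Compares the sample with every template in all four orientations.
 * Returns the best template index, with *dir set to the number of
 * clockwise quarter turns applied to it, or -1 if the best score is
 * below min_score. */
static int match_pattern(Patt sample, Patt templates[], int n_templates,
                         double min_score, int *dir, double *score)
{
    int best_id = -1;
    double best = -2.0;
    for (int t = 0; t < n_templates; t++) {
        Patt rot, next;
        memcpy(rot, templates[t], sizeof rot);
        for (int k = 0; k < 4; k++) {
            double s = corr_score(sample, rot);
            if (s > best) {
                best = s;
                best_id = t;
                *dir = k;
            }
            rotate90(rot, next);
            memcpy(rot, next, sizeof rot);
        }
    }
    *score = best;
    return best >= min_score ? best_id : -1;
}
```

Subtracting the means before correlating makes the score insensitive to uniform brightness changes, which is one reason correlation is a common choice for this kind of matching.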

These patterns are loaded at run time and are contained in the Data directory of the bin directory. In this directory, the text file object_data specifies which marker objects are to be recognized and the patterns associated with each object. The object_data file begins with the number of objects to be specified, followed by a text data structure for each object. Each marker in the object_data file is specified by the following structure:

1. Name

2. Pattern recognition file name

3. Width of the tracking marker (in millimetres)

For example, the structure corresponding to the marker with the virtual cube is:

```
#pattern 1
cone
Data/hiroPatt
80.0
```

Note that lines beginning with a # character are comment lines and are ignored by the file reader. To change the pattern that is recognized, replace the pattern filename (Data/hiroPatt in the example above) with the name of a different template file.
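A reader for the object_data format described above can be sketched as follows. The ObjectEntry type and the next_line and parse_object_data functions are hypothetical, not part of ARToolKit. The sketch assumes one value per line, skips blank lines and lines starting with #, and parses from an in-memory string so it can be tried without any files.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

#define MAX_OBJECTS 16  /* hypothetical capacity used by callers */

/* Hypothetical in-memory form of one object_data entry. */
typedef struct {
    char   name[64];
    char   patt_file[256];
    double width;  /* marker width; the ARToolKit samples use millimetres */
} ObjectEntry;

static int is_blank(char ch) { return ch == ' ' || ch == '\t' || ch == '\r'; }

/* Copies the next line that is neither blank nor a # comment into buf,
 * trimmed of surrounding whitespace, and advances *text past it.
 * Returns 0 when the input is exhausted. */
static int next_line(const char **text, char *buf, size_t size)
{
    while (**text != '\0') {
        const char *start = *text;
        const char *end = strchr(start, '\n');
        size_t len = end ? (size_t)(end - start) : strlen(start);

        *text = start + len + (end ? 1 : 0);
        while (len > 0 && is_blank(*start)) { start++; len--; }
        while (len > 0 && is_blank(start[len - 1])) len--;
        if (len == 0 || *start == '#')
            continue;
        if (len >= size)
            len = size - 1;  /* truncate over-long lines */
        memcpy(buf, start, len);
        buf[len] = '\0';
        return 1;
    }
    return 0;
}

/* Parses the object count, then name / pattern file / width for each
 * object. Returns the number of objects read, or -1 on a format error. */
static int parse_object_data(const char *text, ObjectEntry *out, int max_objects)
{
    char line[256];
    int count;

    if (!next_line(&text, line, sizeof line) || sscanf(line, "%d", &count) != 1
        || count < 0 || count > max_objects)
        return -1;
    for (int i = 0; i < count; i++) {
        if (!next_line(&text, out[i].name, sizeof out[i].name)
            || !next_line(&text, out[i].patt_file, sizeof out[i].patt_file)
            || !next_line(&text, line, sizeof line)
            || sscanf(line, "%lf", &out[i].width) != 1)
            return -1;
    }
    return count;
}
```

Fed the example entry with a leading count of 1, this returns 1 and fills in cone, Data/hiroPatt and 80.0; a count that promises more entries than the file contains is reported as an error rather than silently accepted.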

These template files are simply a set of sample images of the desired pattern. The program that creates them is called mk_patt and is located in the bin directory. The source code for mk_patt is in the mk_patt.c file in the util directory.

ARToolkit tutorial for beginners

Download the latest version, ARToolkit 2.7.2.1.

Refer to the documentation provided on the HitLab website for more information and additional notes.

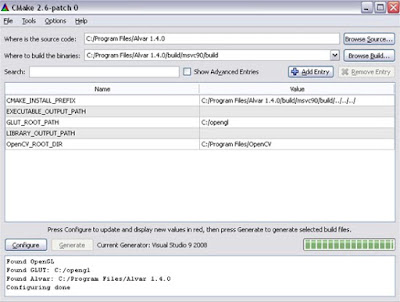

You must know how to configure and set up ARToolkit: in Visual Studio, set the directories under “Option properties” for the linker, include files, DLLs and path.

Download Tutorial 1 (.pdf)

Download Tutorial 2 (.pdf)